I'm sorry

I should have come earlier

HEY AGAIN

I owe you a bit of an apology for the delay.

Christmas and New Year’s in our house didn’t quite go to plan. Both kids were seriously ill with that bug that seems to be going around everywhere. Our holiday was therefore filled with high fevers, long nights and long days that felt more like firefighting than celebrating. Only small presents had already been wrapped in advance, so that’s what they got, and the rest waited until Día de los Reyes yesterday, which ended up being the real Christmas moment for us in Spain.

Between looking after the kids, my husband, and keeping the dog vaguely convinced life was still half normal, something had to give. This time, it was the newsletter slipping by a couple of days.

I mention this not to over-share, but because it mirrors today’s topics as well. Plans are neat on paper but reality rarely is. And the gap between the two is where judgement, trade-offs, and leadership actually show up.

That thread runs through both pieces this week. The Quiet Fix looks at why AI regulation feels so confusing right now, and the Tech Bit explores how procurement technology has pushed many of us into leadership decisions we now cannot avoid.

One quick nudge before we get into it: my AI in Procurement webinar is coming up soon, and it’s already filling up (over 220 of you have already registered!). If this topic still leaves you with many questions and little clarity, I hope it gives you a grounded way of thinking about AI in procurement.

In Today's Issue

The Quiet Fix

AI REGULATION & PROCUREMENT

I see a lot of procurement leaders unsure how deep they’re meant to go on AI regulation.

Between draft laws, guidance, timelines that shift, and headlines that contradict each other, AI regulation feels more complex than it needs to be.

The recent EU AI Act has sharpened this feeling.

It’s comprehensive, phased, and detailed, which is good for clarity in the long term, but in the short term it has left many of us unsure where to focus and what matters now versus later.

In my experience, most of the confusion comes from trying to track every rule, rather than understanding the intent behind them. Once you step back, the picture becomes far less chaotic than it first appears.

I see this uncertainty most often in procurement teams, who are suddenly expected to understand AI regulation well enough to question vendors, negotiate contracts, and assess risk, often without clear internal guidance.

I also think that technology has a habit of shaping behaviour long before we realise it is doing so.

We learned that lesson with social media and I think we are now relearning it with AI, only faster.

From what I’m seeing across organisations, AI systems are already influencing how people work, write, decide, and prioritise. That influence is not neutral, and it compounds quickly.

Regulation exists, in my view, for one reason: to put basic human guardrails in place while there is still time to do so deliberately.

What AI regulation is trying to change

Despite very different political approaches, most AI regulation is aiming at the same outcomes.

Based on how these frameworks are evolving, regulators are trying to:

Keep humans responsible for decisions that matter, especially where systems affect money, access, risk, or rights.

Force clarity about what AI systems actually do so organisations can understand where judgement sits, where automation applies, and where limits exist.

Create accountability when things go wrong rather than allowing responsibility to disappear into models, vendors, or “emergent behaviour”.

Reduce systemic harm before it becomes normalised. Bias, manipulation, large-scale error, and silent dependency are the risks I see regulators trying to contain.

The EU, in my opinion, has taken the clearest position so far, using a risk-based framework that imposes concrete requirements on higher-risk AI systems, including transparency, human oversight, and controls.

The UK has chosen a principles-led route, leaning on existing regulators and putting strong emphasis on keeping humans meaningfully involved in AI-driven processes.

The US remains more fragmented. From what I observe, the focus there is on enforcement, consumer protection, and liability, with a growing patchwork of federal guidance and state-level rules rather than one overarching law.

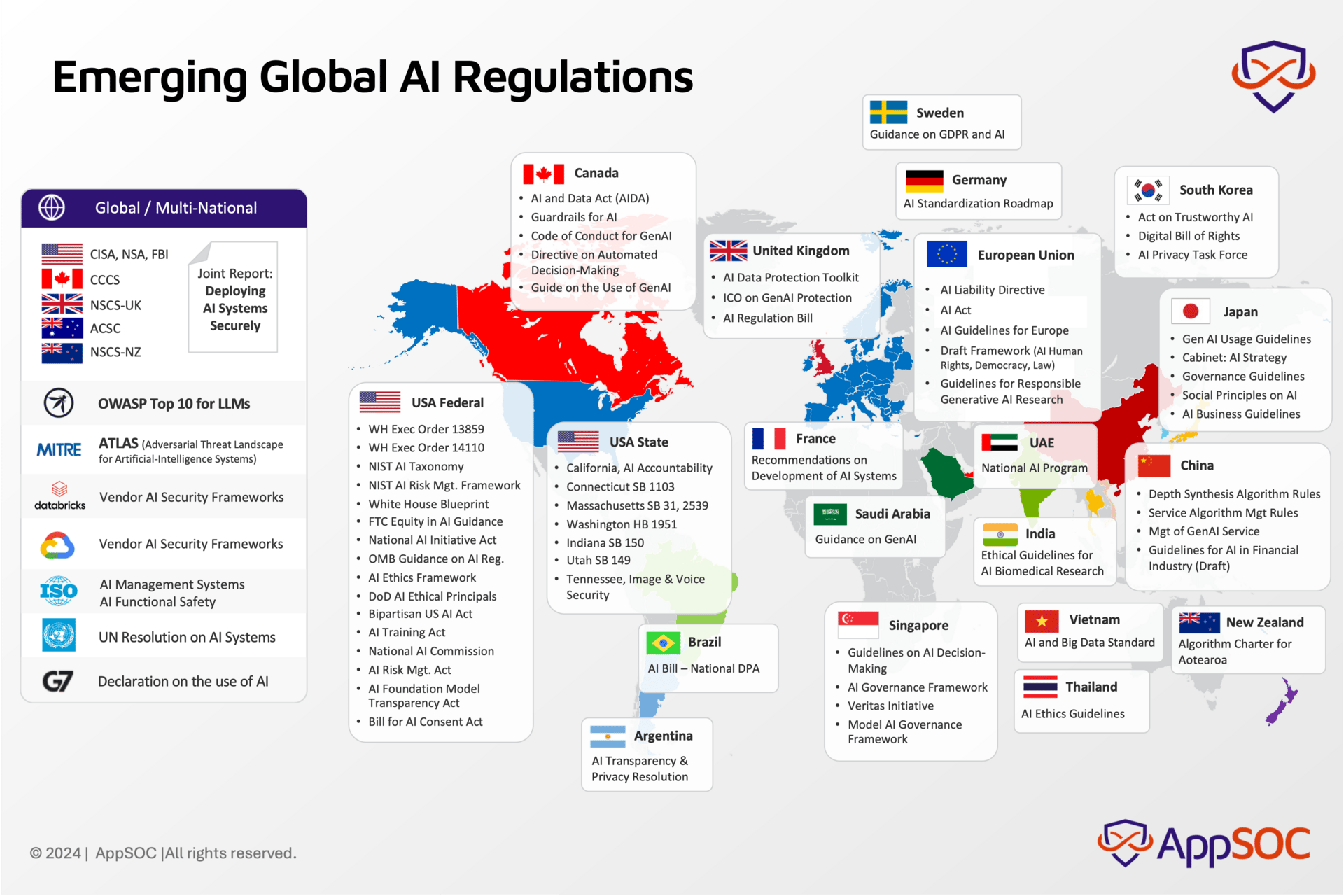

Globally, there is no single approach. Some countries rely on binding law, others on guidance and frameworks.

The chart referenced above (not my own, full credit given) gives a useful overview, with the caveat that it includes a mix of enacted rules, drafts, and guidance.

For procurement, the legal nuance matters less than the direction of travel.

What procurement professionals can do now

In practice, AI systems do not enter procurement organisations through policy documents. They arrive through vendors, platforms, and contracts. And I think this is where procurement slowly becomes one of the most influential functions in how AI is actually used.

Here are actions I would recommend teams start taking now.

1. Ask suppliers to describe exactly how AI is used in their product

Ask for a plain-language explanation covering:

what decisions the system makes or influences,

which outputs are recommendations versus automated actions,

where humans are expected to approve, override, or intervene.

If a supplier cannot explain this clearly, that is usually a signal worth paying attention to.

2. Require defined human approval points for sensitive use cases

Where systems affect supplier selection, pricing, access, compliance, or risk, I would:

specify which decisions require human sign-off,

ensure the workflow enforces this in practice,

confirm who is accountable for approvals internally.

3. Build transparency and audit rights into contracts

I personally find that this is often overlooked. Ask for:

access to logs or audit trails for AI-driven decisions,

documentation of known limitations and intended use,

notification of material model or logic changes.

You don’t need to understand the underlying model in any depth, just its outcomes.

4. Include AI-specific questions in due diligence and renewals

This should now be standard. Cover:

what data is used, stored, or reused for training or tuning,

how incidents or harmful outputs are reported and handled,

which third parties or model providers are involved.

5. Assume standards will rise

Even outside the EU, I am already seeing global vendors align to EU-style safeguards. Procurement teams can use that momentum rather than accepting weaker defaults.

6. Avoid deploying AI where oversight cannot work in practice

If a tool cannot support escalation, override, or post-decision review, I would be very cautious about using it for critical procurement decisions, regardless of how compelling the demo looks.

Please do not misunderstand me: I don’t believe any of this is about slowing innovation. It is about keeping responsibility where it belongs.
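For teams that want to see what actions 2 and 3 can look like inside a workflow, here is a minimal Python sketch. It is an illustration only, not any vendor's API: the list of sensitive decision types, the `Recommendation`, `AuditEntry`, and `ApprovalGate` names, and the example approver are all hypothetical. The idea is simply that a sensitive recommendation cannot proceed without a named human, and that every attempt leaves a trace.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

# Assumption: decision types that always need a named human approver.
SENSITIVE_DECISIONS = {"supplier_selection", "pricing", "access", "compliance", "risk"}


@dataclass
class Recommendation:
    decision_type: str  # e.g. "pricing"
    summary: str        # plain-language description of what the tool proposes


@dataclass
class AuditEntry:
    timestamp: str
    decision_type: str
    summary: str
    approved_by: Optional[str]
    applied: bool


@dataclass
class ApprovalGate:
    audit_log: List[AuditEntry] = field(default_factory=list)

    def apply(self, rec: Recommendation, approved_by: Optional[str] = None) -> bool:
        """Return True if the recommendation may proceed; always log the attempt."""
        needs_sign_off = rec.decision_type in SENSITIVE_DECISIONS
        applied = (not needs_sign_off) or bool(approved_by)
        self.audit_log.append(
            AuditEntry(
                timestamp=datetime.now(timezone.utc).isoformat(),
                decision_type=rec.decision_type,
                summary=rec.summary,
                approved_by=approved_by,
                applied=applied,
            )
        )
        return applied


gate = ApprovalGate()
price_change = Recommendation("pricing", "Accept revised unit price")

blocked = gate.apply(price_change)                                    # no approver named
approved = gate.apply(price_change, approved_by="category.manager")  # human sign-off given
routine = gate.apply(Recommendation("spend_classification", "Tag invoice as IT services"))
```

In this sketch the first pricing attempt is held back, the second goes through once an approver is named, and the routine classification proceeds on its own. All three end up in `gate.audit_log`, which is the kind of trail worth asking suppliers to expose.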

Procurement teams have more influence here than they often realise.

Long before regulation is enforced, buying decisions determine whether AI supports human judgement or quietly replaces it.

From everything I’ve seen so far, this is one of those moments where small, practical choices made now shape outcomes we will live with for years.

The Tech Bit

HOW PROCUREMENT TECHNOLOGY MADE US LEADERS

I didn’t set out to become a “procurement technology person”.

Like most people in procurement leadership roles, I started by trying to fix very practical problems: fragmented spend, slow processes, unclear ownership, endless exceptions. Technology was supposed to help. And at first, it did.

Somewhere along the way, something changed.

Procurement platforms began deciding how requests entered the organisation, which suppliers were visible, how risk was surfaced, and which decisions required approval.

Those choices never stayed exclusively inside procurement.

They affected finance, legal, sustainability teams, and the business itself.

I slowly realised then that technology selection had become a leadership responsibility.

I’ve sat in tool demos where I could already see how the system would shape behaviour on a bad day.

When information was incomplete.

When urgency crept in.

When someone needed to bypass the “right” path to get work done.

Intake tools made this especially clear.

Some systems force every request through rigid paths that technically improve control but quietly teach the business to avoid procurement altogether. Others guide people towards compliant choices without slowing them down.

Both can solve intake, but only one really builds trust.

Supplier selection tools raised similar questions.

I’ve worked with a few platforms that very confidently rank suppliers and present a single “best” option, leaving little room for context or challenge. I’ve also experienced tools that surface trade-offs, risk signals, and alternatives, making it obvious that judgement still sits with a human.

Those are not feature decisions. Think of them as leadership choices.

The same applies to approval workflows.

A system that blocks non-compliant purchases outright sends a very different signal from one that allows exceptions with explanation and traceability. Over time, those signals become habits. Habits become norms. And before long, the system defines how the organisation behaves.

What has surprised me most over time has been the side effects.

By taking responsibility for these decisions, procurement gains an incredible amount of internal influence.

When your choices determine how money flows, how risk is surfaced, and how people experience governance, you are already operating at leadership level.

Procurement technology forced us into that role.

How to approach procuretech decisions as a leader

Seeing technology selection as a leadership responsibility changes how you approach it.

Stay close to decisions that shape behaviour

Engage at the level of workflows, defaults, and escalation paths. These determine how the organisation behaves when things are urgent, unclear, or slightly outside policy.

Evaluate tools based on lived experience

Walk through REAL scenarios. Pay attention to how the system behaves when information is incomplete or trade-offs are uncomfortable.

Be deliberate about where judgement lives

Decide explicitly which decisions must remain human, particularly around supplier choice, pricing, access, and risk. Make sure the technology supports those choices rather than quietly overriding them.

Test with the whole business, not just the project team

Involve finance, legal, and operational stakeholders early. Their friction points will surface design issues that procurement alone might not see.

Treat implementation as the start of stewardship

Watch adoption patterns, workarounds, and edge cases once the system is live. Adjust the technology when behaviour drifts, rather than assuming the problem sits with users.

From what I have seen, procurement leaders who approach technology this way gain influence naturally as they take responsibility for decisions that shape the organisation’s operating model.

Procurement technology has become one of the clearest expressions of how a company chooses to balance control, trust, and judgement.

Making those choices well now sits squarely in the remit of leadership.

And that, quietly, is how procurement earns its influence.

My Best Post Lately

PROCUREMENT WORK

I think this post resonated because there’s a persistent gap between how procurement is perceived and what the work actually involves. Much of the value happens under the hood and across decisions that reside within commercial judgement, risk management, and long-term thinking.

Procurement as a role doesn’t translate easily into a single outcome or metric, and that makes it easy to underestimate us.

This post didn’t try to elevate procurement or argue for its importance. I just showed the shape of the work as it really is. That clarity matters, especially in roles where the most important contributions are not easy to perceive until something goes wrong.

If you work in procurement, this probably felt familiar.

If you work with procurement, it might have explained a few things.

Free Template(s) of the week

MY PAYMENT TERMS CALCULATOR

I know how much you love a good story, so I will give you the one that goes along with this template.

This Excel file came to me early in my consulting career, handed over by a fellow procurement consultant as part of an internal training pack.

Just a spreadsheet that was used to explain why payment terms aren’t just “days on a contract” but a financing decision with real consequences.

At the time, I was junior enough to take it seriously, and experienced enough to notice how rarely anyone else did.

And I’ve kept using it in every single procurement role I’ve ever had.

Years later, I opened an improved version of the same logic in a meeting where my CFO was strongly pushing us to extend terms due to cashflow issues, and procurement was expected to roll it out without asking questions.

I’d been in enough supplier meetings by then to know that pushing a small supplier from 30 to 90 days is a real stress test.

Sometimes the numbers supported longer terms. Sometimes they didn’t. And sometimes they showed that even if the maths worked, the decision still carried an ethical cost a spreadsheet can’t measure.
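To make the “financing decision” point concrete, here is a minimal Python sketch of the kind of arithmetic involved. It is not the spreadsheet itself: the simple-interest formula, the function names, the invoice value, and both interest rates are illustrative assumptions chosen only to show the shape of the calculation.

```python
def financing_cost(invoice_value: float, annual_rate: float, days: int) -> float:
    """Simple-interest cost of funding an invoice for a number of days."""
    return invoice_value * annual_rate * days / 365


def compare_terms(
    invoice_value: float,
    current_days: int,
    proposed_days: int,
    buyer_rate: float,
    supplier_rate: float,
) -> dict:
    """Compare what the buyer gains and the supplier pays when terms are extended."""
    extra_days = proposed_days - current_days
    buyer_benefit = financing_cost(invoice_value, buyer_rate, extra_days)
    supplier_cost = financing_cost(invoice_value, supplier_rate, extra_days)
    return {
        "extra_days": extra_days,
        "buyer_benefit": buyer_benefit,
        "supplier_cost": supplier_cost,
        "net_effect": buyer_benefit - supplier_cost,
    }


# Assumed inputs: a 100,000 invoice moved from 30 to 90 days, a buyer who
# funds itself at 5% a year, and a small supplier who borrows at 12% a year.
result = compare_terms(100_000, 30, 90, buyer_rate=0.05, supplier_rate=0.12)
```

With these assumed inputs, the buyer gains roughly 822 in financing benefit while the supplier absorbs roughly 1,973 in financing cost, so the relationship as a whole is about 1,150 worse off. Change the two rates and the picture can flip, which is exactly why the maths is worth running before the terms become policy.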

That’s why I’ve held onto this tool for so long.

I’ve finally cleaned it up and made it shareable, with the explanations I usually give to my team in person when I hand it over.

I still love this spreadsheet because I strongly feel procurement needs more ways to balance cash, credibility, and supplier reality before decisions harden into policy.

Do you want access to other great templates from previous newsletters? Have a look at the full store below:

A Final Note

These past few weeks I have been thinking of a quote that I’ve carried with me since Stranger Things Season 1:

“Science is neat, but I’m afraid it’s not very forgiving.”

Mr Clarke

Did anyone else watch the finale? It was emotional and heartbreaking… satisfying, and thoughtful, precisely because it didn’t shy away from ambiguity even while giving closure.

That feels close to how I’ve come to think about AI in Procurement. Because science, including applied science like AI, doesn’t forgive indifference or sloppy judgement.

There’s an important difference between saying “AI is exciting” and saying “AI should be shaped by human values and responsibility.” The latter gives us clarity about what actually matters.

We’ll be back in your inbox on Monday the 19th, sharp and on rhythm. No delay this time, just grounded thinking and real conversations about where procurement is heading and how to navigate it with judgement.

Until then, I hope the new year feels purposeful without pressure.

Until next time,

Procurement worth reading.